V-Ray from ChaosGroup can take advantage of both CPU and GPU, but it runs a different benchmark depending on the accelerator, so its CPU and GPU results cannot be compared to each other. An instance on Google Cloud can be made up of almost any combination of CPU, GPU, RAM, and disk. To gauge performance across a large number of variables, we defined how to use each component and locked its value when necessary for consistency. We tested the following render benchmarks:

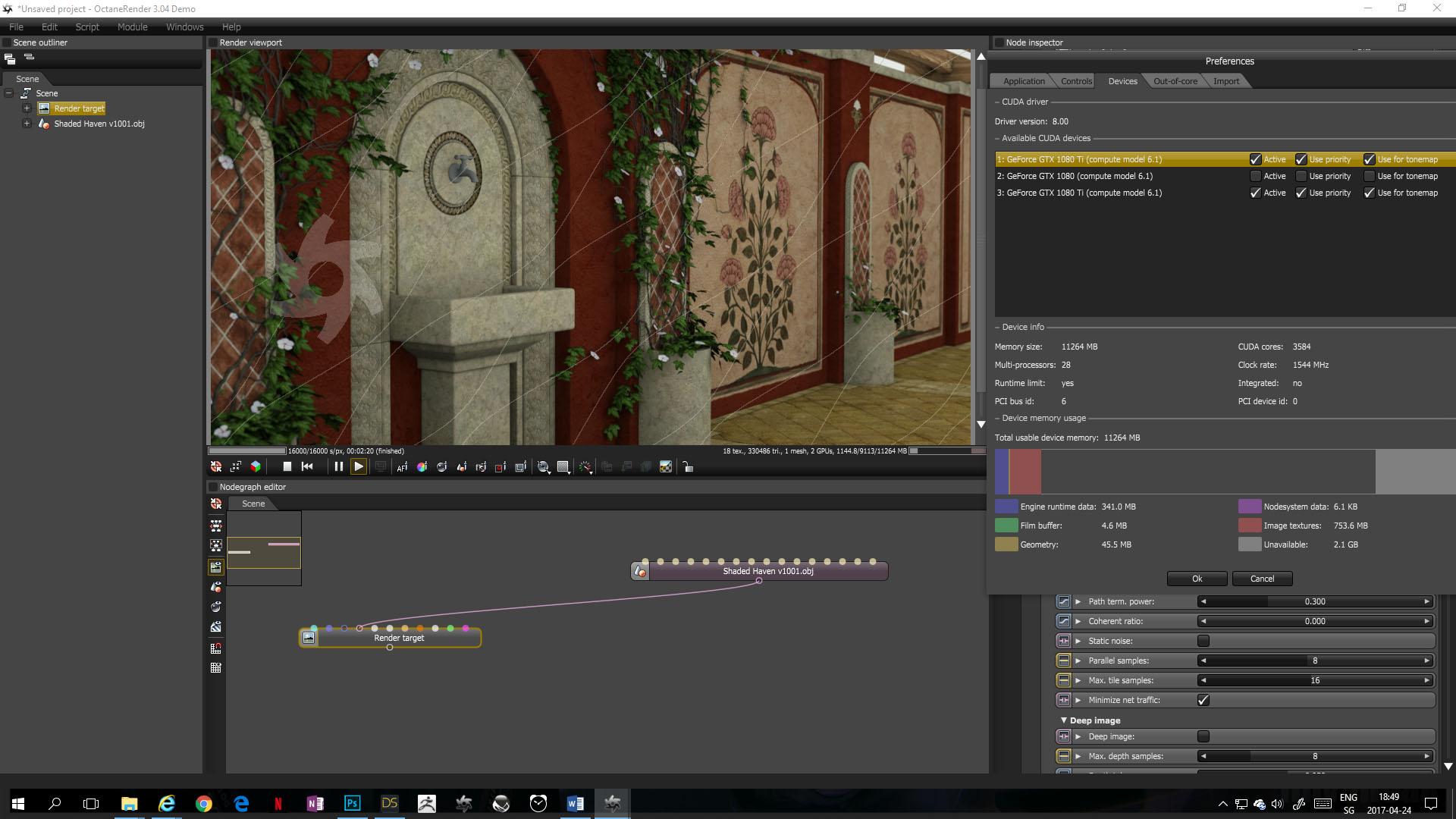

Octane render demo software

We ran benchmarks for popular rendering software across all CPU and GPU platforms and across all machine type configurations to determine the performance metrics of each. The render benchmarking software we used is freely available from a variety of vendors. You can see a list of the software we used in the table below, and learn more about each in Examining the benchmarks.

Note: Benchmarking of any render software is inherently biased towards the scene data included with the software and the settings chosen by the benchmark author. You may want to run benchmarks with your own scene data within your own cloud environment to fully understand how to take advantage of the flexibility of cloud resources.

Render benchmark software is typically provided as a standalone executable containing everything necessary to run the benchmark: a license-free version of the rendering software itself, the scene or scenes to render, and supporting files are all bundled in a single executable that can be run either interactively or from a command line. Benchmarks can be useful for determining the performance capabilities of your configuration when compared to other posted results.

Benchmarking software such as Blender Benchmark uses job duration as its main metric: the same task is run for each benchmark no matter the configuration, and the faster the task completes, the higher the configuration is rated. Other benchmarking software such as V-Ray Bench examines how much work can be completed during a fixed amount of time. The number of computations completed by the end of this time period provides the user with a benchmark score that can be compared to other benchmarks.

Benchmarking software is subject to the limitations or features of the renderer on which it is based. For example, software such as Octane or Redshift cannot take advantage of CPU-only configurations, as they're both GPU-native renderers.
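The two scoring styles described above (time to finish a fixed task versus work completed in a fixed window) can be illustrated with a minimal Python sketch. This is not how Blender Benchmark or V-Ray Bench are implemented; `render_tile` is a hypothetical stand-in workload, and the function names and default parameters are assumptions chosen for illustration only.

```python
import time


def render_tile(n=20_000):
    """Hypothetical stand-in for a render task: a deterministic CPU-bound loop."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def duration_score(task, repeats=5):
    """Duration-style metric: run the same fixed task and report elapsed seconds.

    Lower is better, because every configuration does identical work.
    """
    start = time.perf_counter()
    for _ in range(repeats):
        task()
    return time.perf_counter() - start


def throughput_score(task, window_seconds=0.2):
    """Fixed-time metric: count how many tasks complete within the window.

    Higher is better, because every configuration gets the same time budget.
    """
    deadline = time.perf_counter() + window_seconds
    completed = 0
    while time.perf_counter() < deadline:
        task()
        completed += 1
    return completed


if __name__ == "__main__":
    print(f"duration-style score: {duration_score(render_tile):.4f} s (lower is better)")
    print(f"fixed-time score: {throughput_score(render_tile)} tasks (higher is better)")
```

The two numbers have different units and opposite directions, which is one reason scores from different benchmark tools should not be compared directly.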
To learn more about comparing on-premises hardware to cloud resources, see the reference article Resource mappings from on-premises hardware to Google Cloud. With the flexibility of cloud, you can right-size your resources to match your workload. You can define each individual resource to complete a task within a certain time, or within a certain budget. But as new CPU and GPU platforms are introduced or prices change, this calculation can become more complex. How can you tell if your workload would benefit from a new product available on Google Cloud? This article examines the performance of different rendering software on Compute Engine instances.
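The idea of sizing resources to finish within a certain time or within a certain budget can be sketched as a small selection problem. The following Python example is only an illustration: the `Config` type, the helper names, and every number are hypothetical placeholders, not Google Cloud machine types, prices, or measured results.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Config:
    name: str             # hypothetical configuration label
    hourly_cost: float    # placeholder price per instance-hour
    frame_seconds: float  # placeholder time to render one frame


def job_hours(cfg: Config, frames: int) -> float:
    """Wall-clock hours for one instance to render all frames."""
    return cfg.frame_seconds * frames / 3600.0


def job_cost(cfg: Config, frames: int) -> float:
    """Total cost of the job on one instance of this configuration."""
    return job_hours(cfg, frames) * cfg.hourly_cost


def cheapest_within_deadline(
    configs: Sequence[Config], frames: int, deadline_hours: float
) -> Optional[Config]:
    """Pick the lowest-cost configuration that still meets the deadline."""
    feasible = [c for c in configs if job_hours(c, frames) <= deadline_hours]
    return min(feasible, key=lambda c: job_cost(c, frames), default=None)


def fastest_within_budget(
    configs: Sequence[Config], frames: int, budget: float
) -> Optional[Config]:
    """Pick the fastest configuration whose total cost fits the budget."""
    feasible = [c for c in configs if job_cost(c, frames) <= budget]
    return min(feasible, key=lambda c: job_hours(c, frames), default=None)


if __name__ == "__main__":
    # Placeholder values for illustration only.
    options = [
        Config("small", hourly_cost=1.0, frame_seconds=360.0),
        Config("large", hourly_cost=4.0, frame_seconds=120.0),
    ]
    print(cheapest_within_deadline(options, frames=10, deadline_hours=0.5))
    print(fastest_within_budget(options, frames=10, budget=1.2))
```

In practice, the per-frame times would come from running a benchmark on your own scene data, and the answer has to be recomputed whenever a new platform is introduced or prices change.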

To learn more about deploying rendering jobs to Google Cloud, see Building a Hybrid Render Farm. When gauging render performance on the cloud, customers sometimes reproduce their on-premises render worker configurations by building a virtual machine (VM) with the same number of CPU cores, processor frequency, memory, and GPU. While this may be a good starting point, the performance of a physical render server is rarely equivalent to a VM running on a public cloud with a similar configuration.

When faced with a looming deadline, these customers can leverage cloud resources to temporarily expand their fleet of render servers to help complete work within a given timeframe, a process known as burst rendering.

For our customers who regularly perform rendering workloads, such as animation or visual effects studios, there is a fixed amount of time to deliver a project.
